Software Paradigm Change

Hold on to your hats, folks, because we’re about to take a deep dive into the incredible world of software development! Thanks to the possibilities that Large Language Models (LLMs) such as OpenAI’s ChatGPT and GPT-4 have opened up, we’re entering a whole new paradigm of software engineering that promises to be faster, cheaper, and more efficient than ever before.

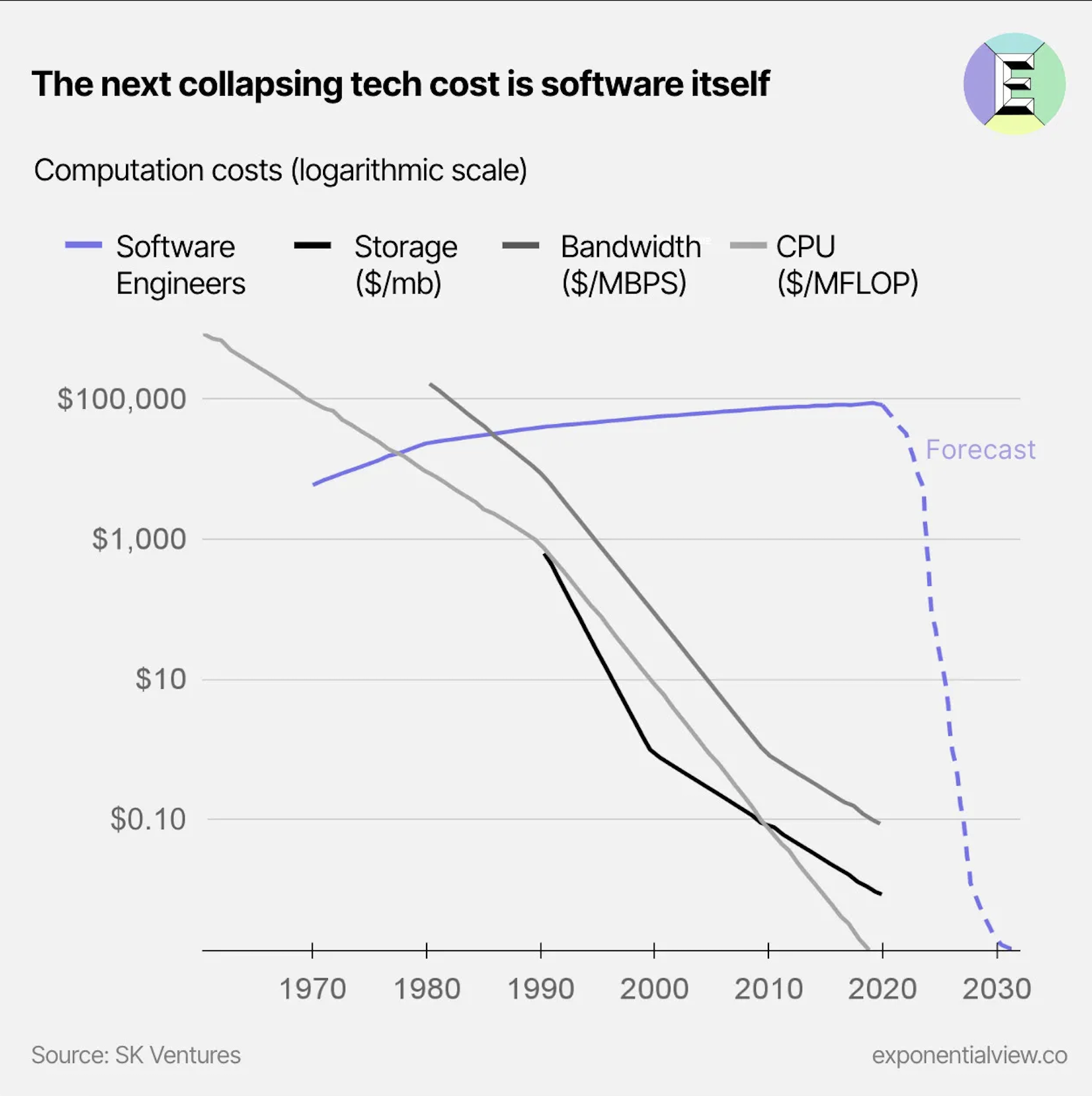

For starters, check out this mind-blowing graph, which predicts a massive drop in software engineering costs over the next couple of years! The main reason? The impressive code-generation capabilities of ChatGPT and Copilot X-like tools. Even Sequoia, one of the biggest players in the VC game, is getting in on the action, predicting a whole new generation of developer products that will replace the old pre-AI ones.

But here’s the thing: I believe that the impact of code generation is just the tip of the iceberg. In fact, I think that traditional software development methods might soon become a thing of the past.

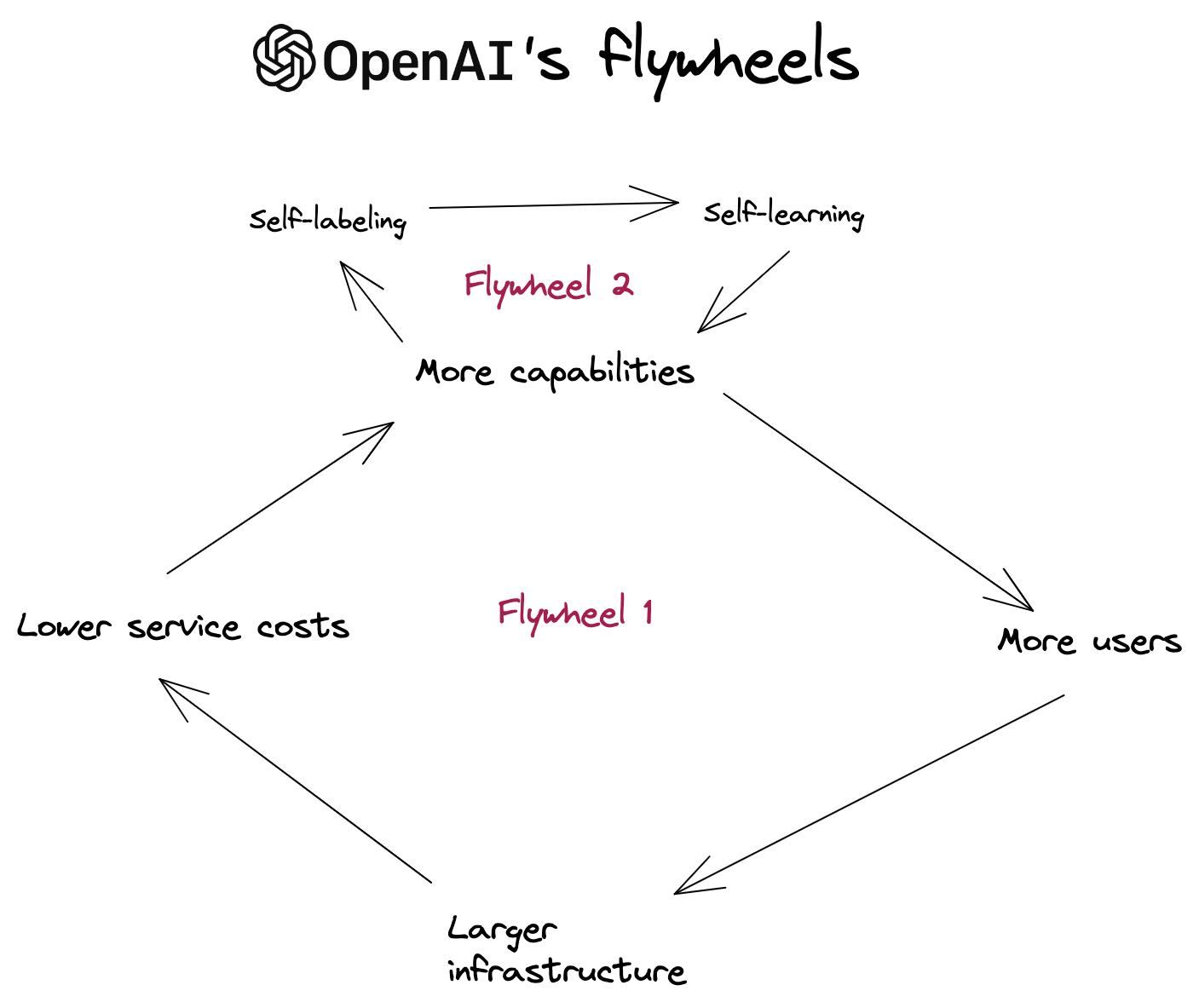

Why? Well, let’s take a look at OpenAI’s flywheel.

OpenAI has two flywheels:

- How to make the service cheaper?

- How to improve the capabilities further?

Here is how the two flywheels reinforce each other:

- If OpenAI improves its capabilities, it will attract more users, which means it will need to invest more in infrastructure and build scalable systems, ultimately decreasing the cost of the service.

- Meanwhile, OpenAI can improve its capabilities by using its own technology to label more data, speeding up how quickly its models improve and enhancing their capabilities further.

So, how will this impact software costs directly?

To answer that, let’s take a look at the different paradigms of software development.

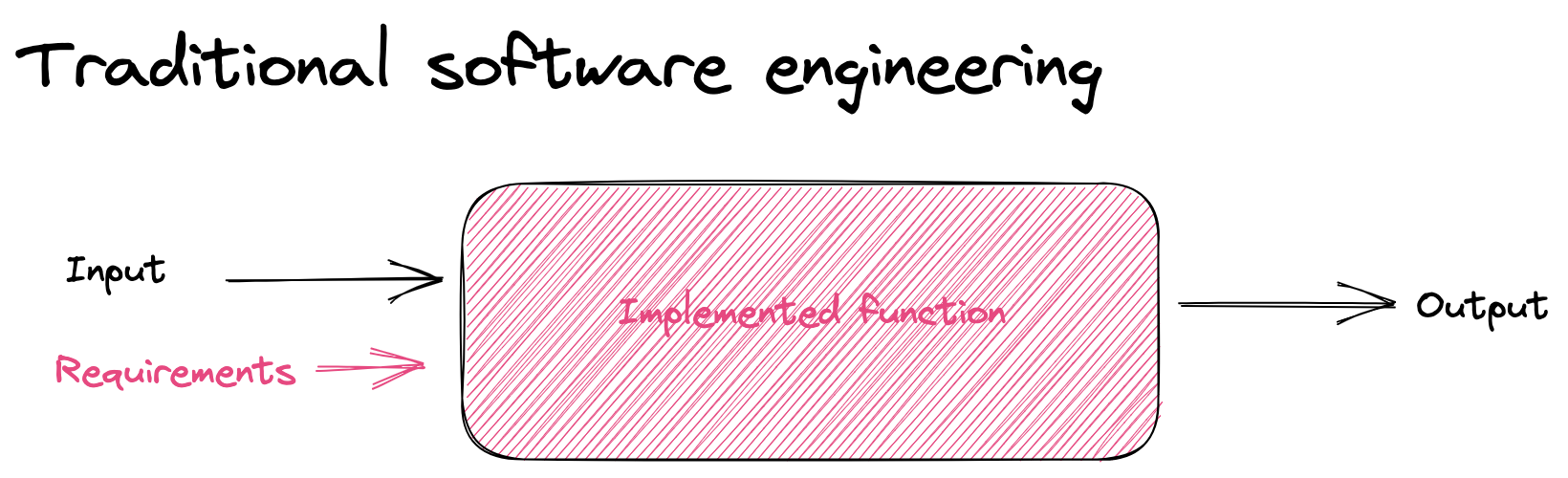

In traditional software engineering, a developer gets requirements from a product manager, product owner, or designer and implements a function that turns an input into an output.
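To make the paradigms concrete, here is a minimal Python sketch of the traditional approach. The review-classification task, the keyword list, and the `classify_review` function are hypothetical illustrations, not taken from any real product. The point is that a developer translates a written requirement into explicit, hand-written rules.

```python
# Traditional paradigm: a developer turns a written requirement into explicit rules.
# Hypothetical requirement: "flag a customer review as negative if it mentions
# a refund, a broken item, or disappointment; otherwise treat it as positive."

NEGATIVE_KEYWORDS = {"refund", "broken", "disappointed"}


def classify_review(text: str) -> str:
    """Return 'negative' or 'positive' using hand-written keyword rules."""
    # Normalise words: drop surrounding punctuation and lower-case them.
    words = {word.strip(".,!?").lower() for word in text.split()}
    if words & NEGATIVE_KEYWORDS:
        return "negative"
    return "positive"


if __name__ == "__main__":
    print(classify_review("Arrived broken, I want a refund."))
    print(classify_review("Great value, works as described."))
```

Every new requirement (a new keyword, a new language, sarcasm) means a developer has to change the code by hand.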

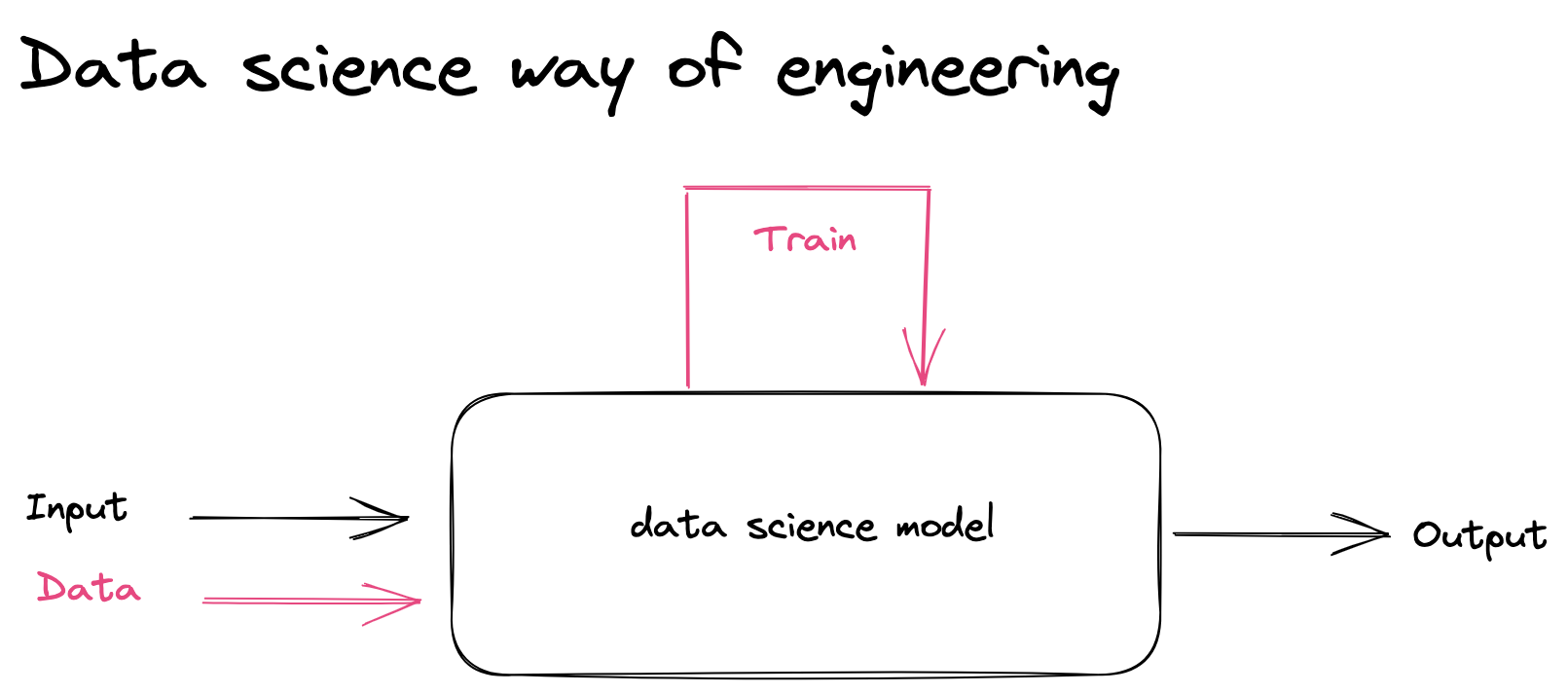


With the data science approach, we provide real-life example data to train a model, which then generates outputs for new inputs. This approach was superior to traditional software engineering for problems that are hard to express as explicit rules, but it required large datasets and a lot of training resources.
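Here is a minimal sketch of the same hypothetical review task in the data science paradigm: instead of writing rules, we hand an algorithm labelled examples and let it learn word statistics. The choice of a naive Bayes classifier, the six-example toy dataset, and all names below are my illustrative assumptions, not a specific production model. A real project would need far more labelled data, which is exactly the cost described above.

```python
import math
from collections import Counter, defaultdict


def tokenize(text: str) -> list[str]:
    """Lower-case words with surrounding punctuation removed."""
    words = (w.strip(".,!?").lower() for w in text.split())
    return [w for w in words if w]


class NaiveBayes:
    """Multinomial naive Bayes classifier with add-one (Laplace) smoothing."""

    def fit(self, texts: list[str], labels: list[str]) -> "NaiveBayes":
        self.label_counts = Counter(labels)      # number of documents per label
        self.word_counts = defaultdict(Counter)  # word frequencies per label
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text: str) -> str:
        total_docs = sum(self.label_counts.values())
        scores = {}
        for label, doc_count in self.label_counts.items():
            counts = self.word_counts[label]
            total_words = sum(counts.values())
            score = math.log(doc_count / total_docs)  # log prior P(label)
            for word in tokenize(text):
                # Smoothing keeps unseen words from forcing the probability to zero.
                score += math.log((counts[word] + 1) / (total_words + len(self.vocab)))
            scores[label] = score
        return max(scores, key=scores.get)


# A toy labelled dataset: the "real-life example data" this paradigm depends on.
TRAIN_TEXTS = [
    "Arrived broken and I want a refund",
    "Very disappointed, it stopped working",
    "Terrible quality, asking for my money back",
    "Great value and works as described",
    "Love it, excellent quality",
    "Fast delivery and works perfectly",
]
TRAIN_LABELS = ["negative"] * 3 + ["positive"] * 3

if __name__ == "__main__":
    model = NaiveBayes().fit(TRAIN_TEXTS, TRAIN_LABELS)
    print(model.predict("Stopped working after a day, want my money back"))
    print(model.predict("Excellent quality, works great"))
```

No rule mentions "money back", yet the model labels that review negative because it learned the association from the examples.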

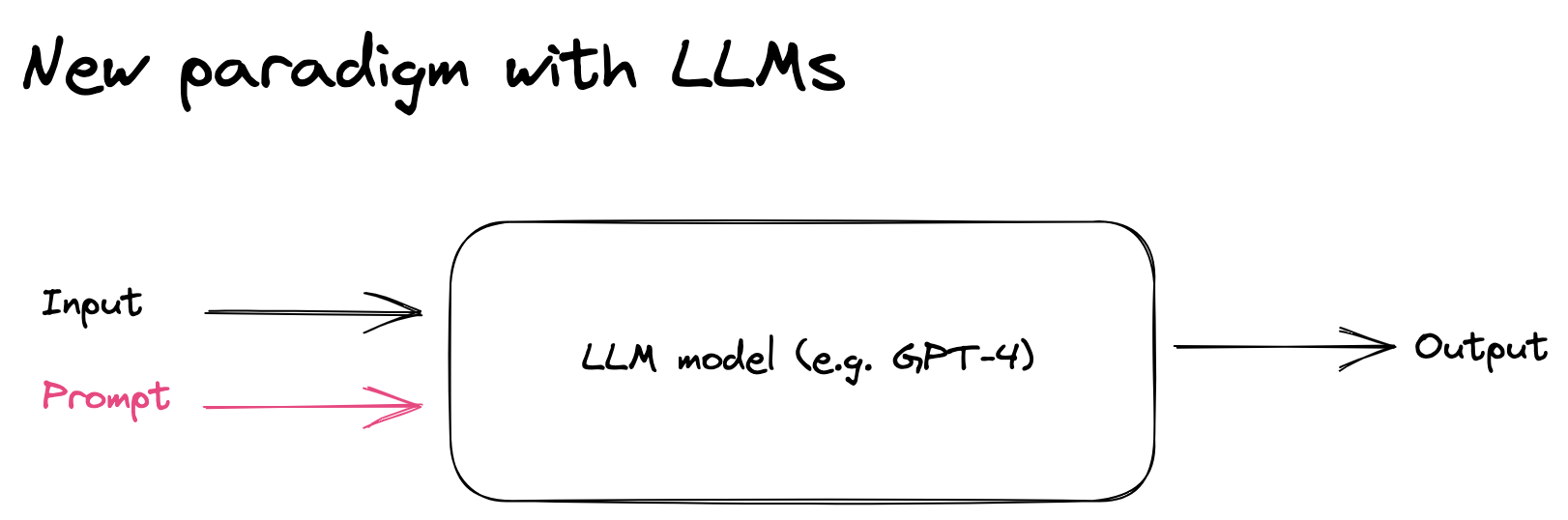


With the latest paradigm shift brought by LLMs, we don’t need to implement the software, train an algorithm, or provide our own dataset for learning purposes. We only need to provide prompts to the model, and the model gives us the output - that’s it. We write the requirements in a more structured way and call OpenAI’s APIs.
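Finally, a sketch of the LLM paradigm for the same hypothetical review task: the requirements become a prompt, and the model does the rest. To keep it runnable offline and avoid guessing at any vendor's client library, the model is represented by a hypothetical `LLM` type (any function that takes a prompt string and returns text). Wiring it up to OpenAI's API is an assumption left out of this sketch, and the canned stub reply below stands in for a real model response.

```python
from typing import Callable

# Hypothetical model interface: prompt in, text out. In practice this would
# wrap a hosted LLM API call; that client code is intentionally not shown.
LLM = Callable[[str], str]

# The "structured requirements", written as instructions instead of code.
REQUIREMENTS = """\
You are a review classifier.
Label each customer review with exactly one word: positive or negative.
Treat mentions of refunds, broken items or disappointment as negative."""


def build_prompt(review: str) -> str:
    """Combine the requirements with the input we want classified."""
    return f"{REQUIREMENTS}\n\nReview: {review}\nLabel:"


def classify_with_llm(review: str, llm: LLM) -> str:
    """Send the prompt to the model and validate its free-text reply."""
    reply = llm(build_prompt(review))
    label = reply.strip().lower()
    if label not in {"positive", "negative"}:
        raise ValueError(f"Unexpected model reply: {reply!r}")
    return label


if __name__ == "__main__":
    # Stub standing in for a real model call, so the sketch runs offline.
    def stub_llm(prompt: str) -> str:
        return " Negative\n"

    print(build_prompt("Arrived broken, I want a refund."))
    print(classify_with_llm("Arrived broken, I want a refund.", stub_llm))
```

The code no longer encodes rules or learns from data. What remains is mostly validation, because a model's free-text answer is not guaranteed to be one of the expected labels.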

So, how does this decrease the cost of software development?

- LLMs decrease the dependency on traditional programmers. Anyone with requirements can start building software by expressing those requirements as prompts, which means fewer people are needed.

- As we use the LLM directly from OpenAI, we don’t need to deploy code to servers as in traditional software engineering or data science, which means less implementation.

- We don’t need to provide our own datasets. Labeling data and extending the capabilities of GPT can become a self-reinforcing loop, as recent research has shown that ChatGPT outperforms Amazon Mechanical Turk crowd workers in text-annotation tasks, which means better functionality.

- We don’t have to worry about scalability or maintainability as OpenAI takes care of that, which means less overhead.

Of course, I’m simplifying many topics in this article, such as how we’ll store data, ensure security, and build complex software. But it’s clear that we’re entering a new realm of software engineering that has the potential to change the way we store data, build software, and think about programming in general. It’s an exciting time to be in the industry!

My thoughts and takeaways:

- I believe that the cost of building software will decrease over the next few years.

- As a result of the paradigm shift, we may see the emergence of new roles such as “product engineers” who use prompts and no-code tools to engineer the future of software.

- If this shift happens, the importance of DevOps may decrease, or it may become specific to larger companies looking to optimize resources. We can already see this trend with companies like vercel.com.

- Until recently, technology was the primary defensibility factor for organizations, but it may shift towards distribution and pricing.

In my next article, I will cover the economic impact of AI and how countries are already starting to worry about it. Also, if you have not read my previous article on ChatGPT plugins and how OpenAI could create a monopoly, please read it here.

🎩 The ideas and frameworks are mine, but this article was partially written with the support of GPT-4.