AI Practitioner Guide: What is AI and Foundational Models? | Chapter 1

In the past 14 months, the term “AI” has gone from a tech buzzword to an integral part of our daily lives. The shift was driven by the arrival of ChatGPT and by the creative explosion around Stable Diffusion (Dreambooth), where people transformed themselves into digital avatars. Numerous AI-driven services have since appeared, reshaping how we interact with technology.

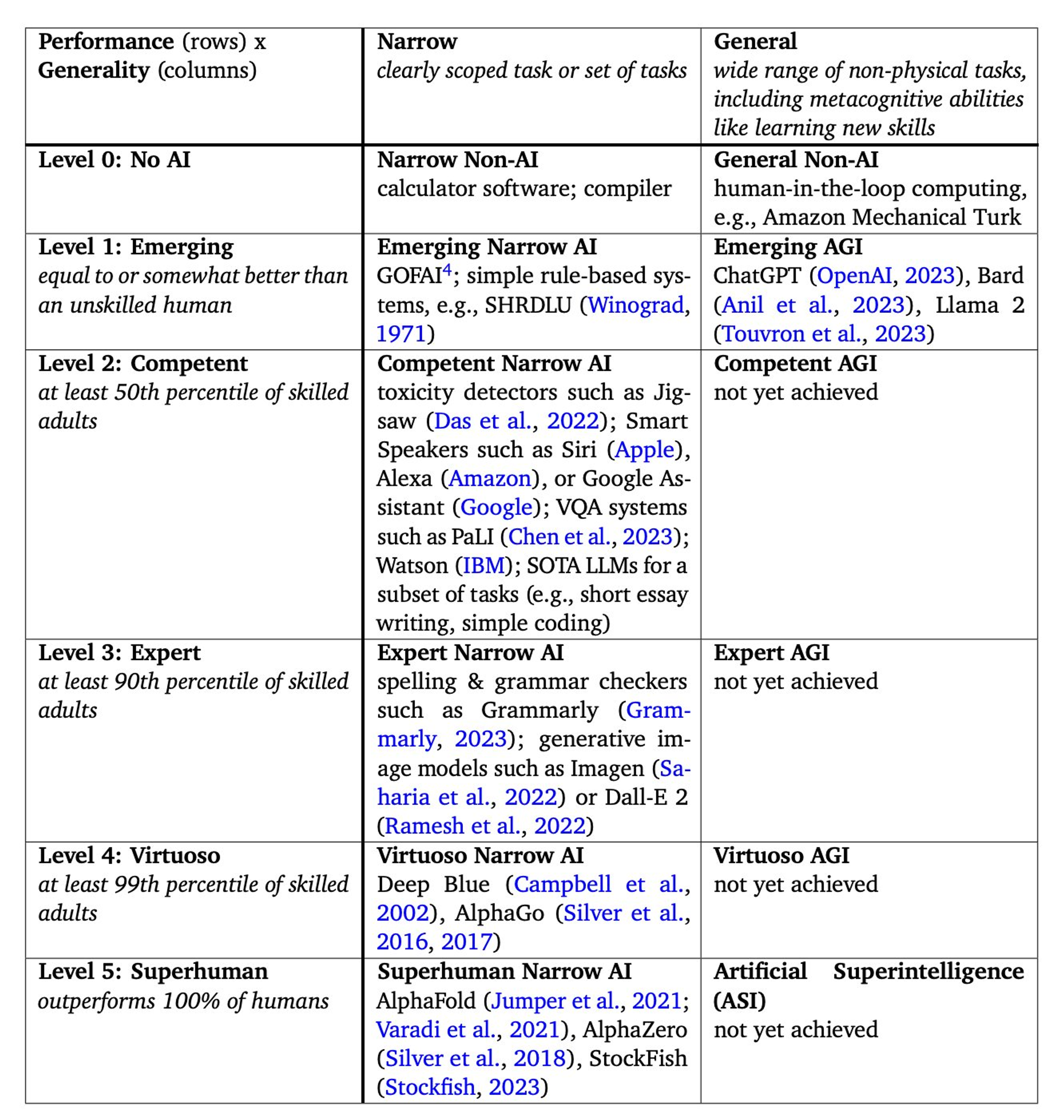

Before delving deeper, it’s crucial to distinguish between two key concepts in AI: Artificial Narrow Intelligence (ANI) and Artificial General Intelligence (AGI).

Understanding AGI and ANI

Ilya Sutskever, a co-founder of OpenAI, describes AGI in these terms: “digital brains that live inside of our computers will be as good as or even better than our own biological brains, computers will become smarter than us”. This description underscores the vast potential and transformative capabilities of AGI.

The “Levels of AI” matrix shown below simplifies the distinction between ANI and AGI. It sets Narrow AI against General AI: the former is built for specific tasks, while the latter covers a broad range of tasks, including the ability to learn new ones.

The Practical Impact of Advanced AI

The evolution of Large Language Models (LLMs) brings us tantalizingly close to achieving “competent” or even “expert” AI levels. This advancement suggests the possibility of AI systems performing at or above the level of an average human employee in various industries.

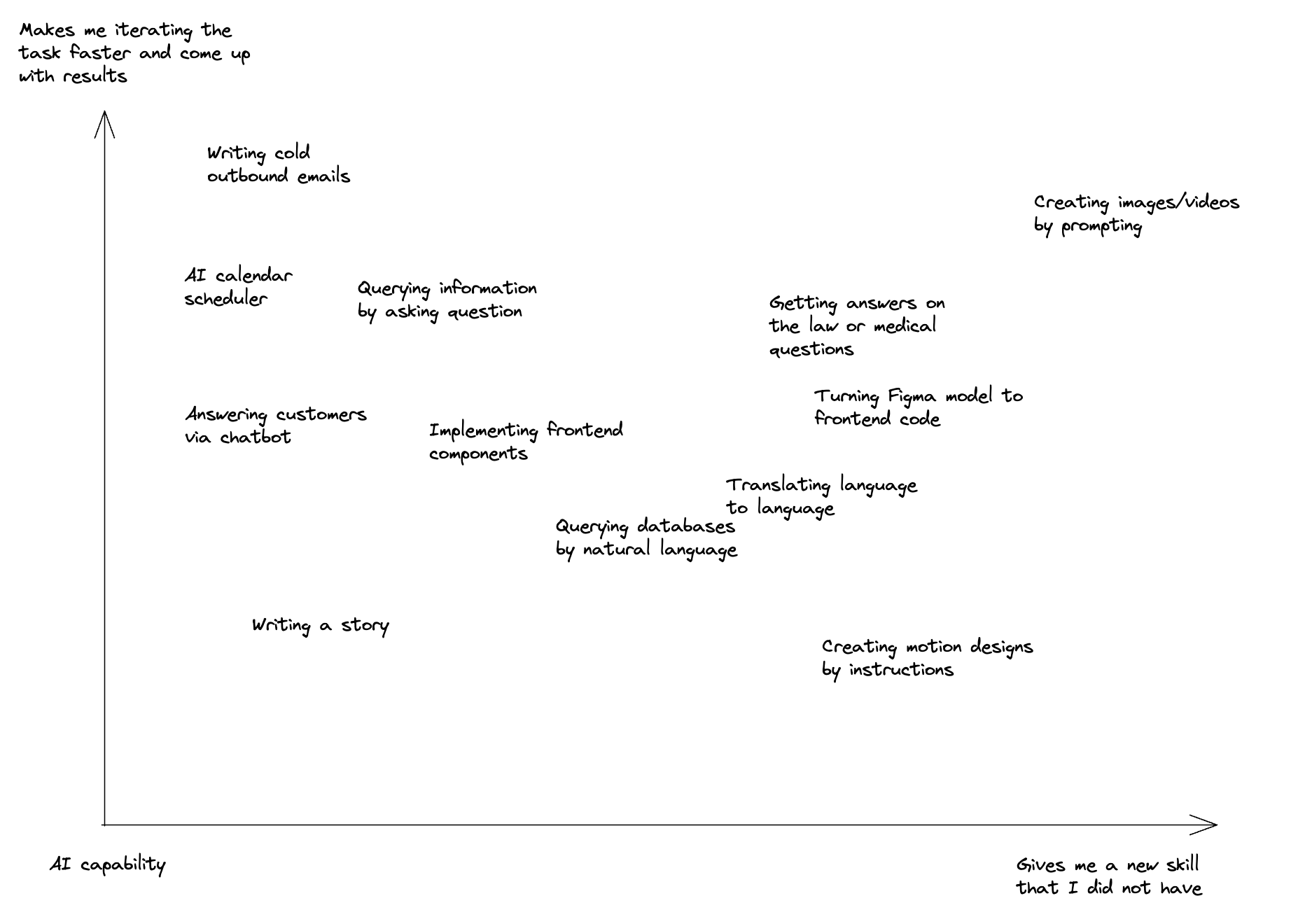

This leap forward gives us tools that can accelerate task completion and enhance our skill sets. AI now aids in tasks ranging from writing and scheduling to complex problem-solving. It can help draft emails, schedule meetings through AI calendar assistants, and provide customer service through chatbots. By answering questions or translating languages, AI serves as an ever-present assistant, ready to tackle a variety of challenges.

The capabilities extend further to specialized tasks like converting Figma designs into front-end code or generating images and videos based on prompts. AI’s versatility is not just about completing tasks but also about learning new skills and creating solutions that were previously inaccessible or time-consuming.

As AI continues to advance, its integration into our professional and personal lives will likely become more seamless, making these tools indispensable for modern workflows.

Yet, a common question arises:

What exactly is AI?

Decoding AI and Foundational Models

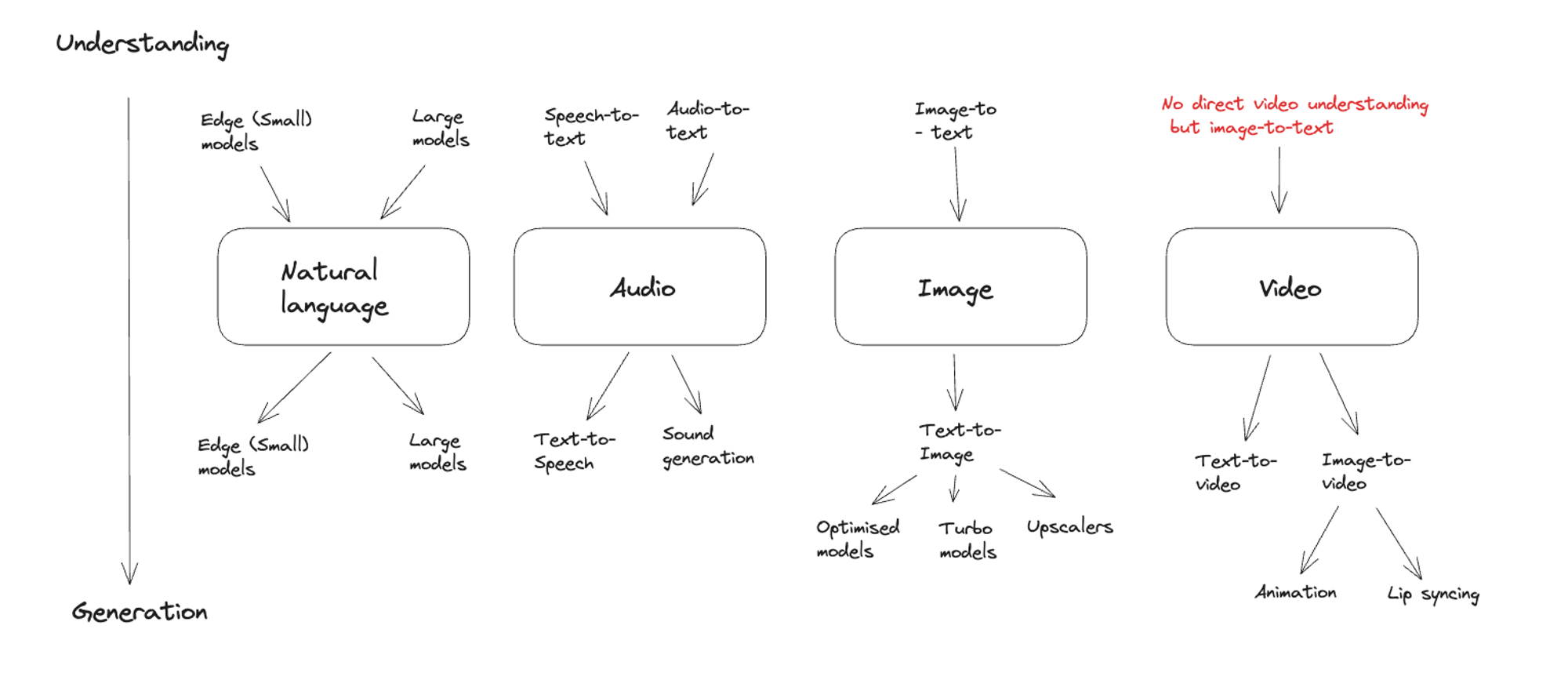

Today, the term “AI” encompasses a broad range of technologies. In the context of generative AI, it refers to “Foundational Models” (more commonly called foundation models): sophisticated systems capable of understanding and generating diverse forms of media, such as text, audio, images, and video.

The diagram above breaks down the capabilities of foundational models into two principal functions: Understanding and Generation.

Within these overarching categories, there exist nuanced subcategories tailored to specific media forms, ranging from speech generation to lip-syncing, each reflecting the depth and versatility of AI’s potential applications.

It is important to understand that AI is not a monolithic entity but a synergy of various models, highlighting the growing importance of “multi-modality” in AI development.

Evolving Resource Requirements of AI

As AI becomes more ubiquitous, its resource requirements take center stage. Companies are now navigating the trade-off between model size and where the model runs: on the user’s device or in the cloud. For instance, Apple champions smaller, on-device models that prioritize privacy and reduce dependency on cloud-based services. These models are designed to run efficiently on consumer devices, bringing AI capabilities directly into the hands of users.

Conversely, organizations like OpenAI are at the forefront of large model innovation. These substantial models, often cloud-based, are equipped with remarkable capabilities due to their vast data processing power and intricate neural networks. While they require significant computational resources, they also set new benchmarks for what AI can achieve.
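To make the size-versus-location trade-off concrete, here is a rough back-of-the-envelope sketch. It estimates only the memory needed to hold a model’s weights, using the standard rule of thumb of parameter count times bytes per parameter. The parameter counts and model labels are hypothetical values chosen for illustration, not figures for any real Apple or OpenAI model, and the estimate ignores activations, caches, and runtime overhead.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}

# Hypothetical parameter counts, chosen only for illustration.
EXAMPLE_MODELS = {
    "small on-device model": 3e9,   # assumed: 3 billion parameters
    "large cloud model": 70e9,      # assumed: 70 billion parameters
}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate memory for the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def footprint_table(models: dict[str, float]) -> dict[str, dict[str, float]]:
    """Return the weight footprint of each model at every listed precision."""
    return {
        name: {p: weight_memory_gb(n, p) for p in BYTES_PER_PARAM}
        for name, n in models.items()
    }


if __name__ == "__main__":
    for name, row in footprint_table(EXAMPLE_MODELS).items():
        cells = ", ".join(f"{p}: {gb:.1f} GB" for p, gb in row.items())
        print(f"{name}: {cells}")
```

Under these assumptions, the 3-billion-parameter model needs about 1.5 GB for its weights at 4-bit precision, which is within reach of a phone, while the 70-billion-parameter model needs about 140 GB at 16-bit precision, which in practice means data-center hardware.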

Key Takeaways

- We have not yet achieved AGI, but it looks like we will reach the Competent and Expert levels very soon.

- Multi-modality is rapidly evolving. We’re not only improving our understanding of text, audio, images, and video but also enhancing our ability to generate them creatively.

- The race between open-source and closed-source models is heating up, but it’s unclear if open-source models can match their proprietary counterparts.

- Factors to consider include the resources required for model training and the contributions of companies pushing open-source boundaries.

- Compact models designed for edge devices (such as smartphones or smart glasses) are gaining remarkable capabilities.

In the next chapter, we’ll explore the diverse landscape of companies and institutions shaping the future of foundational models, in both the commercial and open-source domains.