Recursive LLMs

Last week was thrilling for the world of AI, and I had two significant realizations that I want to share with you.

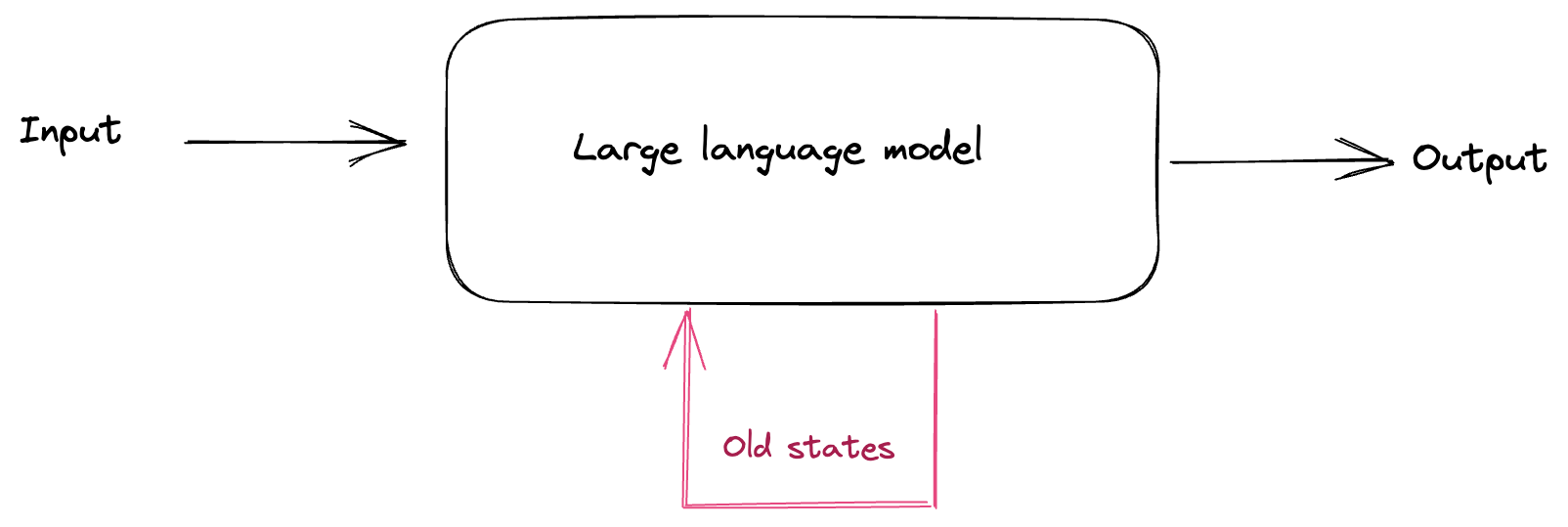

Realization 1: Large Language Models (LLMs) are stateful and become more powerful with more context.

Most software we use is stateless, meaning that each time we open an app, it behaves almost the same way and stores minimal information about our past. This is easier for engineers, but it is limiting. On the other hand, imagine a computer game where your character evolves over time. When you reopen the game, you start from where you left off with your coins and weapons intact.

LLM chats are similar to games in that they are stateful: the history of the conversation is sent along with each new message, so the model responds based on the previous context.
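To make this concrete, here is a minimal sketch of how a chat app can keep a conversation "stateful" by resending the whole history on every turn. `fake_llm` is a hypothetical stand-in for a real model call (no actual API is used), and the role/content message shape is only an assumption modeled on common chat APIs. The example inputs are made up.

```python
from typing import Dict, List

# One chat message, e.g. {"role": "user", "content": "..."}
Message = Dict[str, str]


def fake_llm(messages: List[Message]) -> str:
    """Hypothetical stand-in for a real model call.

    It only reports how many user messages it received, to show that
    every call sees the whole conversation so far.
    """
    user_turns = [m["content"] for m in messages if m["role"] == "user"]
    return f"I have seen {len(user_turns)} user message(s); latest: {user_turns[-1]!r}"


class Chat:
    """Keeps the conversation history and resends all of it on every turn."""

    def __init__(self, system_prompt: str) -> None:
        self.history: List[Message] = [{"role": "system", "content": system_prompt}]

    def send(self, text: str) -> str:
        self.history.append({"role": "user", "content": text})
        reply = fake_llm(self.history)  # the full history goes with each call
        self.history.append({"role": "assistant", "content": reply})
        return reply


chat = Chat("You are my growth hacker.")
print(chat.send("Our main competitor is Acme."))
print(chat.send("Last month we had 1,200 sign-ups."))
```

The "memory" lives in `self.history`, not in the model: the second reply knows about the first message only because the app sent it again.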

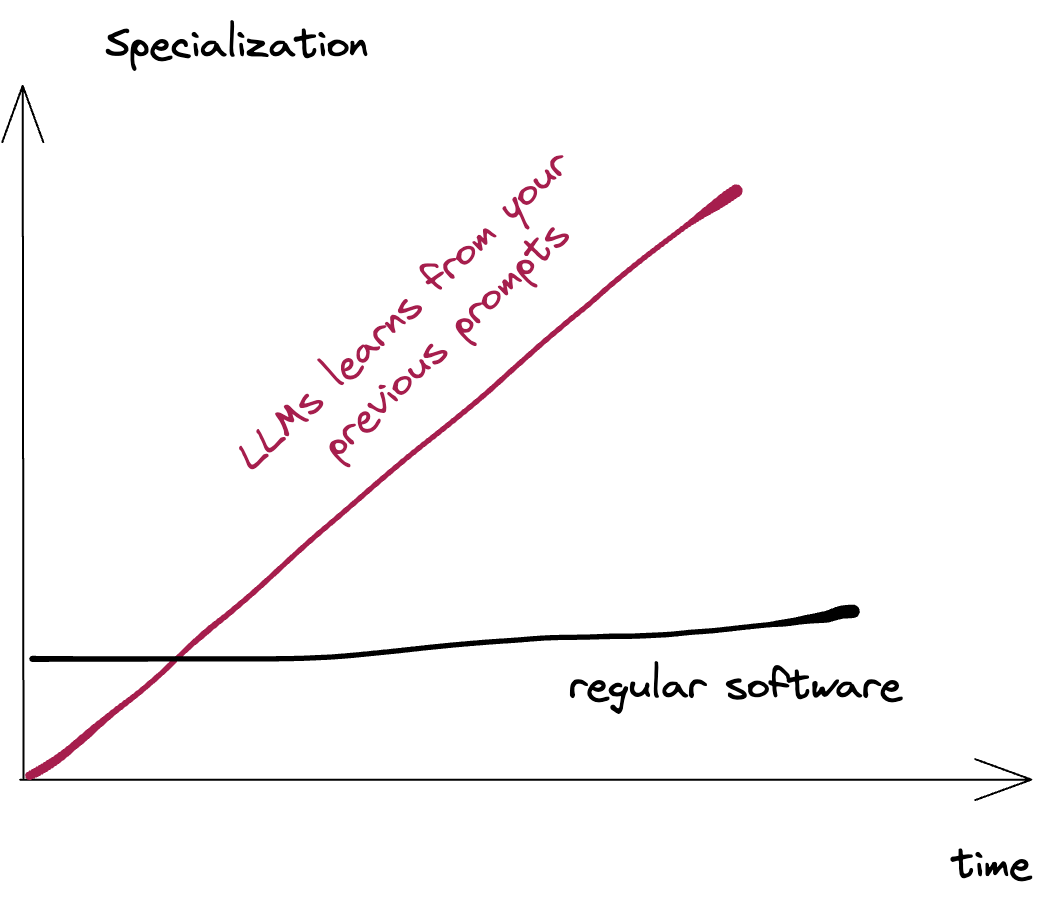

This makes LLMs incredibly powerful. They become more specialized to your context the more of it you feed them. For example, if you want ChatGPT to be your growth hacker, you can tell it about your competitors, the strategies you’ve tried, and your monthly numbers. As you feed it more data, it starts to understand your specific context and provides personalized solutions.

Realization 2: Because LLMs are stateful, multiple LLMs interacting with each other can create something even more powerful.

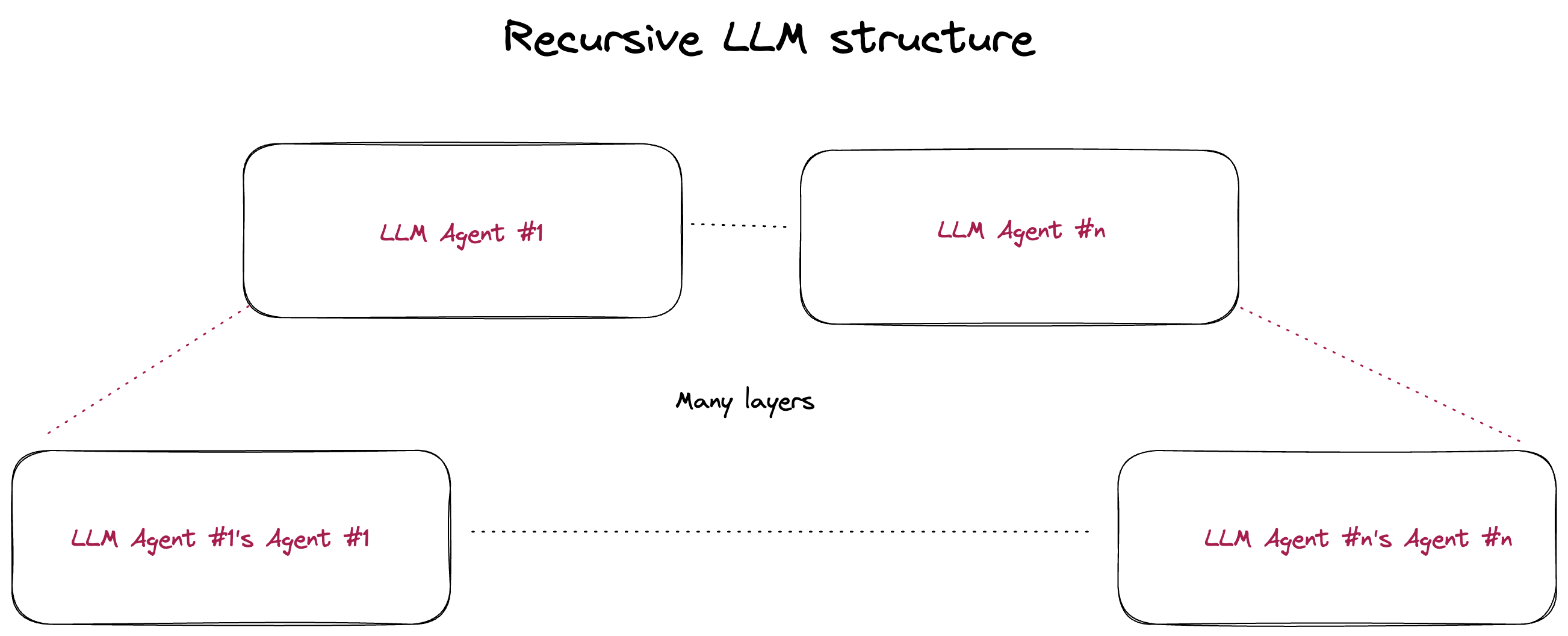

Long-term usage of LLMs leads us to our next exciting topic: what happens when we combine multiple long-running, stateful LLMs and let them interact with or even control each other? 🤔

This idea has been buzzing on Twitter since last Wednesday, with two exciting projects catching everyone’s attention: AutoGPT and BabyAGI. Both have already shown that multiple GPT agents can interact with each other to accomplish complex tasks.
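As a rough illustration of agents working together, here is a simplified two-agent loop: a "planner" agent turns a goal into a task queue and a "worker" agent drains it, each keeping its own memory. This is a hypothetical sketch loosely in the spirit of the task-list idea behind projects like BabyAGI; it is not how AutoGPT or BabyAGI are actually implemented. `planner_model` and `worker_model` are made-up stand-ins for real model calls.

```python
from collections import deque
from typing import Callable, Deque, List

# A "model" here is any function from prompt text to reply text.
Model = Callable[[str], str]


class Agent:
    """Wraps a model and remembers every prompt/reply pair it has handled."""

    def __init__(self, name: str, model: Model) -> None:
        self.name = name
        self.model = model
        self.memory: List[str] = []

    def ask(self, prompt: str) -> str:
        reply = self.model(prompt)
        self.memory.append(f"{prompt} -> {reply}")
        return reply


# Hypothetical stand-ins for real model calls.
def planner_model(goal: str) -> str:
    # Pretends to break a goal written as "a; b; c" into one task per line.
    return "\n".join(step.strip() for step in goal.split(";"))


def worker_model(task: str) -> str:
    return f"finished '{task}'"


def run(goal: str, planner: Agent, worker: Agent) -> List[str]:
    """The planner fills a task queue; the worker processes it in order."""
    tasks: Deque[str] = deque(planner.ask(goal).splitlines())
    results: List[str] = []
    while tasks:
        results.append(worker.ask(tasks.popleft()))
    return results


planner = Agent("planner", planner_model)
worker = Agent("worker", worker_model)
for line in run("research competitors; draft a campaign; review numbers", planner, worker):
    print(line)
```

In a real system both agents would call an LLM, and the planner would typically also look at the worker's results to add or reorder tasks.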

Here’s a mind-blowing thought:

What if we created a recursion of LLMs that have their own LLMs? It’s LLMs within LLMs within LLMs, a Russian doll of AI! Each LLM could split its task into smaller pieces and hand them to LLMs of its own, which could do the same. Imagine the power of such a system!
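Here is a minimal sketch of that recursion, under loudly stated assumptions: `split_task` and `solve_directly` are hypothetical stand-ins for LLM calls (splitting happens at the first " and " instead of by prompting a model), and `MAX_DEPTH` is an illustrative cap so the Russian doll always has a smallest doll.

```python
from typing import List

MAX_DEPTH = 2  # hypothetical cap so the recursion always stops


def split_task(task: str) -> List[str]:
    """Hypothetical stand-in for asking an LLM to split a task in two.

    Splits at the first ' and '; returns [] if the task can't be split.
    """
    parts = [p.strip() for p in task.split(" and ", 1)]
    return parts if len(parts) > 1 else []


def solve_directly(task: str) -> str:
    """Hypothetical stand-in for a single LLM answering a small task."""
    return f"[{task}]"


def recursive_llm(task: str, depth: int = 0) -> str:
    subtasks = split_task(task)
    if not subtasks or depth >= MAX_DEPTH:
        return solve_directly(task)
    # Each subtask goes to a "child" LLM one level deeper, which may split again.
    answers = [recursive_llm(sub, depth + 1) for sub in subtasks]
    return " + ".join(answers)  # the parent combines its children's work


print(recursive_llm("find leads and write emails and send them"))
# [find leads] + [write emails] + [send them]
```

The depth cap is a design choice worth keeping in any real version: without it, a model that keeps splitting tasks would spawn workers forever.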

It’s funny how this idea was right in front of us all along, but it took these projects to make it click. Two things helped me see the potential of combining LLMs:

- Back in computer science classes, we learned about k-tape Turing machines, which use several tapes and read/write heads working together and can compute anything a standard Turing machine can. AutoGPT and BabyAGI can loosely be thought of as k-tape Turing machines, with multiple LLMs working together to solve complex problems.

- There’s a Rick and Morty episode that explores recursion: small swarms collaborate to accomplish tasks and even create their own workers. Watching it made me realize the potential of combining multiple LLMs to work together in a similar way.

If you haven’t seen the Rick and Morty episode, I highly recommend it. It’s a great way to understand the power of collaboration and recursion in creating complex systems. And if you’re interested in exploring the potential of AutoGPT and BabyAGI, be sure to check out their GitHub pages.

Here are my two cents on this exciting topic:

- Stateful LLMs are the way to go! The real world is complex and highly stateful, so it makes sense that LLMs with stateful capabilities can handle complex problems like a pro.

- The idea of combining multiple LLMs to create something even more powerful is just like how we humans create civilizations! We work together, orchestrating different people and resources to achieve something great, and multiple LLMs could do the same.

This is it, folks: we may have just witnessed the first signs of real “AI”! Who knows where this will take us, but it’s definitely worth keeping an eye on.

🎩 The ideas and frameworks are mine, but the article was partially written with the support of GPT-4.