The UX of the personal AI

This week, two news stories hinted that a dream of mine might be on the verge of becoming a reality.

Firstly, reports surfaced that OpenAI is collaborating with Jony Ive, Apple’s former chief design officer, on a new device designed to elevate AI interactions. This development isn’t entirely surprising: I anticipated it as early as March in my first article on OpenAI and ChatGPT. Although it was foreseeable that OpenAI would move into hardware, the involvement of Jony Ive and additional investment from SoftBank make the news even more thrilling.

Secondly, Meta has unveiled a new AI assistant alongside an updated version of its Ray-Ban smart glasses. While the glasses may not yet offer cutting-edge AI capabilities, focusing instead on spatial video recording, it’s evident that future iterations will integrate more advanced AI features.

In light of these advancements, two pivotal questions arise:

- How should this new device enable interaction with personal AI?

- What constitutes an excellent user experience for personal AI?

The Ideal Device for Interaction?

Recall the 2013 film “Her.” Theodore Twombly communicated with Samantha, the AI, using wireless headphones. Interestingly, this depiction predates the release of AirPods by three years.

Inspired by the movie, one might assume that simple headphones connected to a phone would suffice for comprehensive AI interaction. I predict that the first version of OpenAI’s device won’t deviate substantially from AirPods. The open question is whether it will pair directly with phones.

This design has inherent limitations. For anyone who envisions an AI akin to Iron Man’s Jarvis, visual data is essential for full contextual understanding. I’m skeptical that OpenAI’s first iteration will address this. Moreover, processing visual data drains batteries, and recording people raises privacy concerns, which leads to several complex challenges.

What Defines Optimal UX for Personal AI?

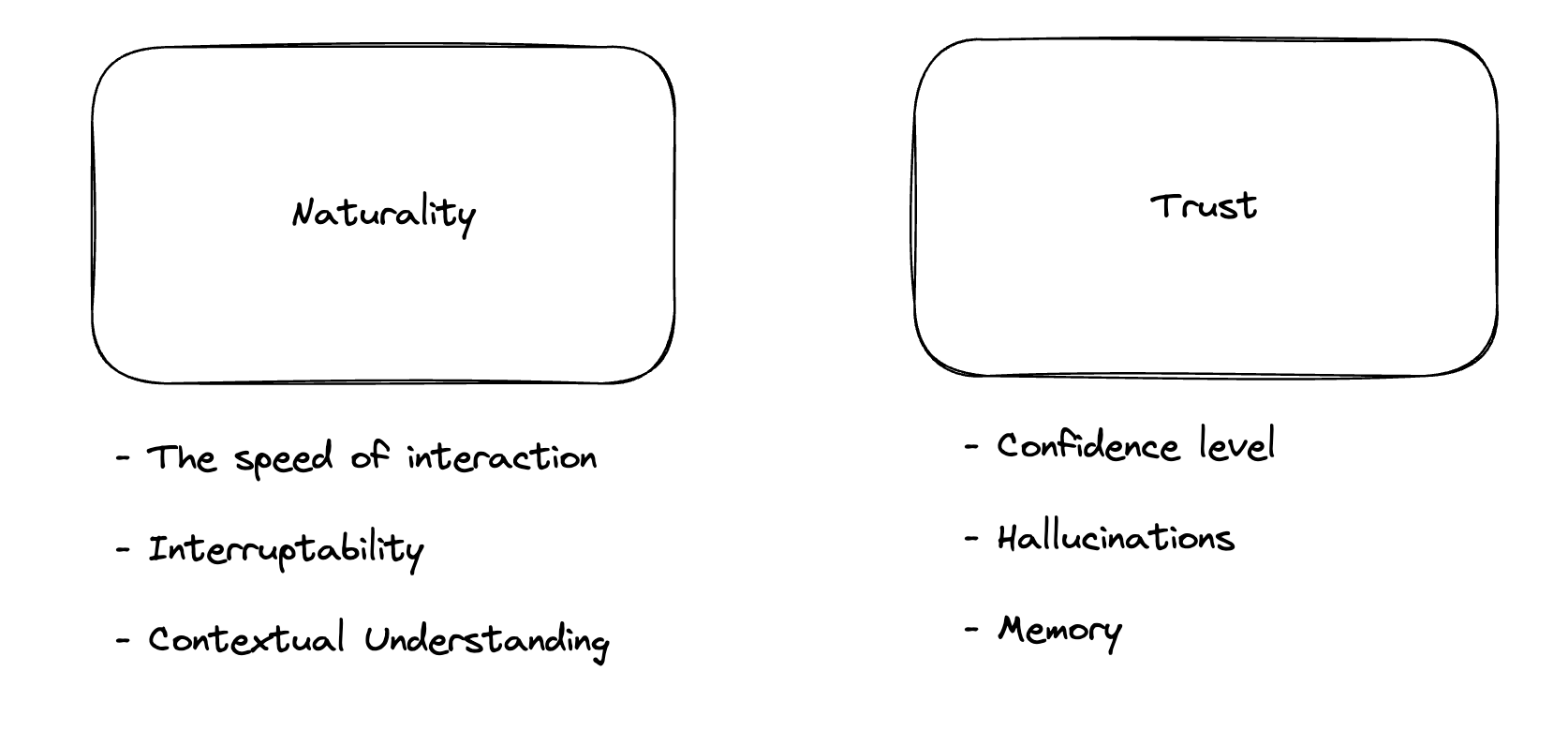

The answer rests on two core principles: naturality and trust.

Let’s explore both dimensions.

Naturality

As of 2023, we converse primarily with living beings. When talking with adults, children, babies, or even pets, we can roughly predict their responses and tailor our words accordingly. In contrast, the commands we give inanimate objects are basic, like asking Alexa to switch on the lights.

However, recent advances in large language models (LLMs) are shifting this dynamic. For the first time, machines can engage in genuine “conversations.” Although text-based interactions still feel rudimentary, spoken communication with AI will be transformative, especially given OpenAI’s recent announcement that ChatGPT can now respond by voice.

But what makes a spoken exchange feel authentic?

A genuine conversation is characterized by its rhythm and the subjects discussed. Current AI interactions face three primary UX challenges:

- Speed of Interaction: A quick response time is vital to keep a dialogue with AI engaging; slow replies disrupt the conversational flow. As highlighted by a study from Princeton, swift responses strengthen social bonds during exchanges.

- Interruptibility: People often interrupt each other to seek clarity or share insights. While this can feel disruptive, it can also enrich a discussion. ChatGPT, by contrast, can give verbose responses, which is especially problematic in voice because we take in speech more slowly than we read text. Users should be able to interrupt their AI seamlessly, and the AI should adapt promptly.

- Contextual Conversations: Conversations are underpinned by context. It’s not just the subject that matters, but also the setting, the desired outcome, and the time and space available. Current interactions with personal AI often lack these nuances.

Trust

Establishing trust is paramount for meaningful conversations. Trust takes time to build, but once shattered, it’s arduous to mend. In the realm of AI, I identify three significant challenges:

- Confidence Level: LLMs often project unwavering confidence, even when they shouldn’t. Their inability to gauge their own accuracy can erode our trust.

- Hallucinations: A byproduct of how LLMs are trained is their tendency to produce plausible-sounding but false or nonsensical outputs, which can derail serious conversations.

- Memory Limitations: Currently, LLMs like ChatGPT struggle to maintain conversational history over long exchanges and across sessions. This lack of continuity hinders personalization and trust. Companies such as Anthropic, with its Claude models, are working to address this issue, but there’s a long way to go.

In Conclusion

Over the next 24 months, AI companies will focus on improving the UX of personal AI. Rapid advances in ultra-realistic avatars, text-to-speech technology, and AI itself will usher us into a new era: a symbiosis of humans and AI.

It seems we’re on the cusp of interacting with real-world NPCs (a nod to my fellow gamers 👋🏼).

Key Takeaways:

- We’re likely to see AI assistants built into AirPods-style earbuds within the next 24 months, but several UX hurdles remain.

- Addressing these challenges will require a blend of human-centric and technological solutions.

🎩 The ideas and frameworks are mine, but this article was partly written with the support of GPT-4.